CRMP Process evaluation

Who is this Guidance for?

This guidance relates to the evaluation component of the CRMP Strategic Framework. It is designed to support those individuals tasked with leading, managing, and developing Community Risk Management Plans (CRMPs) in UK Fire and Rescue Services (FRSs). It provides advice on the application of evaluation techniques in relation to the development and delivery of the CRMP. It does NOT address evaluation of the impact and effectiveness of the actions drawn from the information within the CRMP. Despite this, the outcomes and impact of previous activities are a necessary starting point in developing or updating a CRMP.

Additional Guidance to complement the CRMP Framework is being developed for Evaluation of FRS Interventions and for Competencies that may be required throughout CRMP development.

This guidance should be considered in conjunction with the following NFCC Guidance.

- Data and Business Intelligence

- Defining Scope

- Equality Impact Assessment

- Stakeholder and Public Engagement

- Hazard Identification

- Risk Analysis

Why is there a need to undertake assurance evaluation?

Assurance evaluation is a critical aspect of good project management and of the implementation of guidance, interventions and activities. It is not an add-on to the CRMP; it is a key component that runs through each theme and each other component. Unfortunately, one of the reasons assurance evaluations are sometimes poorly constructed and carried out is that they are seen as an add-on to a process, rather than an integral part of it.

The consequences of this lack of emphasis and importance can be severe, and in the case of CRMP, inadequate assurance of the process may restrict and limit the effectiveness of planning. This can lead to knock-on effects, such as missing data, overlooked issues, inadequate risk mitigation, and the endurance of addressable risks within communities.

To be undertaken properly, assurance of the process of developing the CRMP must cover the whole process, from the starting point to the end point of the CRMP Framework.

Assurance activities need to be implemented appropriately and objectively from the start of the CRMP to demonstrate and develop ideas and practice. Such assurance does create an additional workload, but there are ways to simplify the process. For example, one way to ease the workload is to incorporate assurance activities into ongoing project or programme activities. This will be discussed further in Section 3.

This guidance has been produced by research experts with considerable experience of carrying out formative and summative assurance evaluation and evaluation of impacts of a CRMP, and of working closely with the Fire and Rescue Service (FRS) sector over a number of years.

In addition to this objective and independent expertise, the evaluators consulted FRS personnel with experience of process assurance evaluations, including members of the FRS Consultation, Research and Evaluation Officers (CREO) Group.

Objectives

- To provide UK FRSs with step-by-step support to assess (through a process evaluation) the extent to which each service has achieved (or is working towards achieving) the objectives of the CRMP.

- To ensure that the completeness of each theme of the CRMP Framework is verified, both as a CRMP is being developed – and again as a final review.

- To succeed in the above by providing guidance that is accessible to any member of the team developing the CRMP, no matter what previous exposure they have to evaluation techniques.

Introduction

In order to achieve these objectives, the guidance covers two main aspects: understanding good practice in process evaluation and applying good practice in relation to the CRMP development.

The guidance does not cover outcome and impact evaluation in any great depth. Where references to outcome and impact evaluations are made, this is done to ensure that FRS staff understand the differences (and linkages) between outcome and impact evaluations, and a process assurance evaluation (such as one covering the development and delivery of the CRMP).

Where practicable, individual FRSs (or a partnership of multiple FRSs) may consider appointing an external evaluation expert to support the development of the CRMP and all linked processes.

In following this guidance, FRS staff will be able to either carry out a process assurance evaluation internally, or confidently commission and manage external evaluation experts to carry out a process assurance evaluation on their behalf.

For those wanting to consult more technical and theoretical guidance, reference to the Magenta Book (central government’s guidance on evaluation) can be found in the accompanying bibliography.

Acknowledgement of Shared Risks

In carrying out an evaluation of the CRMP, it will be important to acknowledge and address occasions where an identified risk is not limited to the purview of one FRS. Therefore, in considering and mitigating risk, FRS staff should assess the extent to which it is a shared risk, and which other services / organisations might need to be consulted.

Shared risks could include (but not be limited to) the following types – those which:

- Impact on / cross over into adjacent boundaries

- Involve local authorities

- Involve other emergency services

- Exhibit characteristics that might present a similar risk in other (non-adjacent) areas

- Involve other organisations due to their location and the risks attached to them – e.g., a local airport

FRS staff should consider which (if any) other parties might be involved in addressing an identified risk and enter into dialogue with those parties to consider their opinions and planning, and to develop a shared evaluation strategy of the separate and combined Risk Management Plans.

Applying this consistent approach to evaluation will ensure a more assured CRMP that can withstand scrutiny from internal and external governance, inspection, and public consultation.

Shaping the Guidance

This guidance has been designed and co-created with due regard to the following:

- Reference to existing CRMP products provided by the NFCC

- Access to individual CRMP products

- Input resulting from consultation, discussion, and interviews with FRS staff – including those working closely on CRMPs within their own Fire and Rescue Service.

In assimilating all the data, wider information, views, and ideas provided, the guidance has been shaped to best meet FRS requirements, and with recognition of the impact and requirements of different structures and governance models for the FRS.

Central to this has been the need to ensure the information contained within is applicable to the whole of the CRMP Framework and to any UK FRS no matter the level of its resources or capacity.

This ensures consistency in its application; however, this content can also be used as a template that can be tailored for process evaluation of each component, as required.

Structure of the Guidance

The following report structure is designed to enable FRS staff to be well-placed to confidently identify (and respond to) the strengths and weaknesses within the CRMP process.

Section One covers a more in-depth outline of the objectives of this guidance, and provides answers to general evaluation questions such as ‘What is evaluation?’ and ‘What is monitoring?’ It is critical that those tasked with overseeing evaluation of the CRMP have a basic understanding of how to respond to these questions.

Section Two presents a brief description of the different types of evaluation that can be applied to projects and programmes (further detail is provided in Annexe One).

Although this evaluation is concerned with the process of developing the CRMP, it cannot be dissociated from linked disciplines such as outcome and impact evaluations, as a review of past activities based on the CRMP is a starting point in reviewing the next iteration.

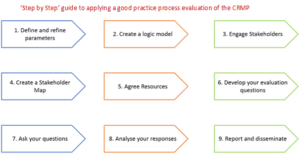

Section Three contains the ‘good practice’ that should be followed by FRS staff in evaluating their approach to, and their development of, the CRMP. This is the key section in terms of guidance. It contains all the steps necessary for the application of a robust evaluation and starts with a visual guide to the step-by-step procedures for FRS staff to follow.

At appropriate intervals within the guidance, there are:

- Learning points: These indicate specific learning that staff will acquire from the guidance.

- Action points: These will help FRS staff to identify and put into place the main process evaluation activities.

Section One: Evaluation or Monitoring?

Both evaluation and monitoring are essential in any work that intends to improve a process. They form the basis of effective measurement in the chain that travels from baseline to impact. In terms of the CRMP, it is particularly important to know the difference between evaluation and monitoring. These are often complementary disciplines and activities, but they are not designed to cover the same aspects.

While monitoring is essential to understand, the focus of this guidance is on assurance evaluation, and so in the remainder of the document, more in-depth information is provided on evaluation than on monitoring.

Learning Point: Following use of this section, you should have a greater understanding of what evaluation is, why you need to evaluate, and what the differences are between ‘evaluation’ and ‘monitoring’.

What is assurance evaluation?

Assurance Evaluation seeks to justify and improve processes, interventions, projects, pilots, and products – in this case the CRMP process.

In carrying out assurance evaluations, both the process and the outcomes and impacts can be assessed to deliver better services. All evaluation is carried out using an appropriate research methodology, and in terms of the CRMP it should seek to explain how all elements of the CRMP Strategic Framework are being (or have been) developed and delivered, what effect they are having (or have had), for whom, how, and why.

To that extent, a process evaluation of CRMP development will:

- Clearly identify that each element of the CRMP Framework has been completed

- Show what consultation and EqIA processes took place and their inputs and the responses to them for each element of the CRMP Framework

- List the lessons learned in developing and delivering the CRMP

In recognition of the many aspects to keep track of and consider, step through this guidance systematically. By the end of Section 3, it will be clear how tasks can be divided for easier management within a successful CRMP process evaluation.

What is monitoring?

Monitoring involves a process of continually capturing a wider range of relevant data that complements the more periodic evaluations that seek to explain that data. Such monitoring provides an opportunity to indicate areas of improvement as the process evolves.

For example, business intelligence is required throughout the whole CRMP process. This will require data collection and monitoring throughout; however, there will still be a need for it to be part of the process assurance evaluation. This means that data monitoring activities will be concerned with measuring results and KPIs (e.g. an appropriate number of stakeholder opportunities in an appropriate style for the relevant group, etc.), while the assurance evaluation will be concerned with the completeness and thoroughness of the process and delivery decisions made in collecting that data.

Evaluation and monitoring are complementary but differ in the focus of the questions being asked, the frequency and scope of data collection, and what we are aiming to achieve.

Monitoring should:

- Capture data relevant to the evaluation.

- Provide checks on quality control.

- Ensure data is fit for purpose.

- Measure KPIs.

- Account for spending and provide Return on Investment information where possible.

- Record activities and outputs to keep projects on track.

It is important that monitoring is rigorous and transparent and covers the following questions:

- What data needs to be gathered to give reliable and consistent measurement against CRMP objectives?

- Who will be responsible for gathering CRMP data and intelligence (across all themes and components), and what resources are required?

- How will CRMP data be gathered and stored?

- How will CRMP data collection comply with GDPR and other data management requirements?

- How will CRMP data be verified to ensure it is accurate and consistent with the relevant requirements?

- How will CRMP data be collected and reported, when, and for whom?

- Can CRMP data be aligned with the schedule for auditing and evaluation?

- How much use can be made of existing data sources? In some cases, an external body such as a university has licensed access to data that is not able to be used externally, as the licence is strictly for research purposes. Any data used for the CRMP should be able to reference the data source, so unless the source is available, the data should not form a part of the CRMP.

To reiterate, the focus of this guidance is on assurance evaluation. The above should be considered a starting point to, and outline of, the basic principles of monitoring development of the CRMP; it is not intended to be used as a complete set of monitoring guidance.

Learning Point: By the end of this section, you should understand the different evaluation types and how they link together, and be confident about the specific evaluation type related to this guidance.

Section Two: Evaluation types

Although the focus of this guidance is on the development and application of CRMP, this section should be read in conjunction with Annexe One, which provides a brief overview of two other evaluation types (‘outcome’ and ‘impact’).

NB: While ‘outcome’ and ‘impact’ are distinct types of evaluation, evidence of outcomes and impacts (definitions in step 2) is considered within this guidance to be an indicator within a process assurance evaluation.

This may be a source of potential confusion, especially to staff new to evaluation. The steps laid out in Section 3 are not intended to be used to evaluate outcomes or impacts, but evidence pertaining to both may be considered when evaluating the development and presentation of the CRMP.

Process Assurance Evaluations

Process assurance evaluations describe and assess services, activities, policies, and procedures. They provide feedback as to whether a project, programme, or intervention (in this case the CRMP process) is being implemented as intended, what barriers have been encountered, and what changes or variations to development might be required. Most importantly, they may reveal why outcomes were, or were not, achieved. This process can be visually captured in a project’s logic model (described in step 2).

The focus of a process assurance evaluation tends to be on the types and quantities of activities and services; the beneficiaries of those services; the adherence to plans; the resources used to deliver them; the practical problems encountered; and the ways such problems were resolved.

For example, in order to secure intended outcomes and impacts within the CRMP, the activity of identifying and mitigating hazards will include the following (all of which are part of the process of delivering the CRMP):

- Consultation with stakeholders.

- The implementation and administration of a project plan.

- The collection of monitoring data.

- A range of associated inputs and expected outputs.

- Regular process reviews.

Process assurance evaluations are focused on those activities and decisions made during the development and lifetime of a process, and should seek to:

- Capture, explain and assess the steps that have led to the CRMP’s previous intended (and unintended) outputs, outcomes, and impacts.

- Complement and refine CRMP data to improve the accuracy, robustness, and usefulness of information able to be presented to decision makers to better inform resource allocation and strategic activities.

- Provide a Gap Analysis of processes against the needs as identified by the CRMP.

- Establish what was delivered through the previous CRMP (to whom, when, in what form).

- Assess how to develop or improve CRMP delivery.

- Understand what CRMP development best practice might be ‘mainstreamed’ across the FRS network, in terms of sharing lessons learned and good practice.

This is accomplished through an assessment of the following:

Economy

Assessment of the cost of developing the CRMP Strategic Framework, benchmarked against other FRSs in the same Family Group, and identification of future opportunities for collaboration and/or cost savings.

Effectiveness

The extent to which CRMP links to impact (captured in the Logic Model, as explained in step 2).

Efficiency

Contribution of the CRMP to better outcomes and outputs.

Relevance

In relation to CRMP team participants and stakeholders.

Sustainability

Contribution to future sustainability of the CRMP and contribution to wider sustainability in terms of communities, economy, and environment.

To measure the above, the process evaluation should seek to ask:

- Who is involved in the development of the CRMP? Why are these individuals or organisations involved? How are they involved? Are there any significant omissions?

- Who has been engaged and are all relevant groups represented?

- Do all individual organisational CRMP processes link together, and do they link to the expected outcomes and impacts?

- Has the process worked in the way it was expected to work? If not, in what ways and why not? What worked well? What didn’t? What recommendations can be made during the evaluation to strengthen the CRMP?

- What barriers were encountered in implementing the CRMP process? Were they overcome? How, and to what conclusion? Are there any impacts from the handling of these barriers that still need to be addressed to ensure a robust CRMP?

- How does the CRMP interact with the monitoring of data and KPIs?

- Was the resourcing right in terms of staff and budgets?

- Does the CRMP process work across all levels of staff – strategic, tactical, and operational?

- What can be improved in future iterations?

With the answers to these questions in hand, evaluators should be able to evidence and review the following:

- Success characteristics and areas of good practice.

- Processes adopted in setting up and presenting the CRMP.

- The barriers and enablers associated with setting up and presenting the CRMP.

- The expectations of stakeholders in relation to the CRMP.

- The extent to which the CRMP has met (or is working towards) objectives.

- How the assurance evaluation of the whole CRMP process aligns with other research and evaluations (either those targeted at certain aspects of the CRMP or other evaluations which touch on CRMP related activities).

- Gaps and potential gaps in the development and delivery model of the CRMP.

- How factors or elements of the current CRMP have motivated or incentivised change at an individual, departmental, or organisational level (or de-motivated or discouraged change), and how these have been identified and addressed in the refresh.

- How the plans for developing the CRMP were adhered to and carried out. Any related variations and the impact on systems and people should be captured and reported in the final report (any variations could lead to negative or positive impacts).

- Opportunities that have emerged.

Section 3 will outline a step-by-step guide to achieving each of these points.

Section Three: Assuring the CRMP process – a ‘step by step’ guide

This section is central to the understanding and application of ‘good practice’ in terms of implementing the guidance. It is structured in an easy-to-use format and takes a step-by-step approach in describing how to apply a consistent and robust assurance evaluation across all aspects of the CRMP Strategic Framework. It is designed to equip FRS staff with the knowledge to make informed decisions on the approach to take. It does this by addressing the whole as a seamless process in which the assurance evaluation is applicable across all components of the CRMP, and where the themes and components are built into one evaluation.

The step-by-step approach can be visualised as follows:

The challenge for FRS staff will be to apply these steps to the whole CRMP in a consistent, robust manner. The skills acquired can then be transferred across themes and components if required, using the same techniques, applying the same types of questions (albeit with slight variations), and employing the same disciplined approach.

Learning Point: After completing Steps 1 and 2 below, the difference between defining the parameters of the assurance evaluation and defining the scope of the CRMP should be clear. This should make it easier to explain what the assurance evaluation will and will not cover, the timeframes involved, funding sources, and resource requirements (how much, and who is to be involved). It will also show how to construct a simple logic model.

Step 1: Define and Refine Parameters

This step relates to defining and developing the parameters of the assurance evaluation – or scope. It should not be confused with developing the scope of the CRMP; these are two different things, the latter being a component within the CRMP.

Defining the ‘evaluation parameters’ in this guidance focuses on setting the range of the assurance evaluation: ruling things in, and ruling things out.

To ensure consistency, the most practical approach will be to design one assurance evaluation which can be tailored to each theme and component within the CRMP Strategic Framework, as required.

This guidance is designed to be applied to both development and delivery of the CRMP – as such, it can be applied in isolation to specific components of the CRMP during development, and to the entire CRMP when evaluating delivery, as well as a combination of the two.

In terms of the whole CRMP, the evaluation should begin with a clear statement of what is to be evaluated so that this can be shared and consulted upon. It should contain timeframes, resource plans, funding sources, objectives, and any other relevant information.

The following should then be considered as potential parameters of the evaluation:

- Effectiveness of project structure and delivery mechanisms

- Outputs and targets

- Risk

- Changing environment or context

- Sustainability of activities

- Lessons learned

- Value for money

- Highlighted examples of good practice

- Practical recommendations for future development

- Identification of outcomes

- (Net) economic and social impacts

- Opportunities identified

In order to help this process, ask:

- Where should the line be drawn for each of these parameters, and what is the rationale for drawing it there?

- What is the purpose of each parameter: what does it measure and why is that important?

- What can be excluded, and what is the rationale for excluding it?

In this step of the evaluation, it may help to look at already existing evidence available from monitoring (particularly any gaps), or to the recommendations made by prior evaluations. These sources can help to refine parameters and settle on an agreed scope.

To visualise this scope in terms of all the expected inputs and outputs, it is advisable to create a simple logic model, as shown in Step 2.

Step 2: Create a Logic Model

A logic model illustrates an overview of a project or process in a simple one-page diagram. It tells the story of how project activities will lead to its anticipated results, in other words, the theory of change.

The benefit of producing a simple logic model is that it will provide a clear overview that can be used to explain the CRMP and how objectives, processes, outputs, and other elements link together. It provides a useful monitoring and assurance evaluation tool, and it highlights assumptions, all of which should be clearly defined – and identifies those that need to be treated as a risk.

In terms of the CRMP, a logic model should cover the following (see Annexe Two for a worked example):

Context – demonstrates why the CRMP has been developed and which stakeholders have been identified as the framework’s focus. In other words: what is the issue, and who requires help. This can be covered outside of the logic model in the project scope.

Inputs – describes what will be invested in the development of the CRMP: things like funding (cost and source), staff, access to data, technology, equipment, buildings, and any other resources utilised.

Activities – describes the activities necessary to develop the CRMP: what will happen as part of the CRMP development, and who will be involved in delivery.

Outputs – these will document the results of past activities resulting from the previous CRMP and suggest further needs from the CRMP to refine and improve these results: things like use of ‘Targeted Safe and Well’ checks to mitigate specific community risk.

Outcomes – these will be the intended results from delivering the CRMP’s outputs, and the benefits that are expected to be realised from these outcomes: things like improved service, or community safety. It is useful to quantify any agreed standards of improvement, such as: reduce fire-setting by 30% over the next 24 months.

Impact – this will capture the project’s expected contribution to the social, economic, and environmental consequences of the outcomes achieved: for example, safer communities or fewer fires. Again, specific targets that have been agreed as part of wider strategies should be identified. It is also helpful to describe here the expected geographic and demographic distribution of the anticipated impacts.

Assumptions – every project works from a series of assumptions. It is important to be clear about what these are, and to have them written down clearly so that risks can be managed. Assumptions should consider things such as wider issues that may impact on the success of the project (an example being the Covid-19 pandemic), any relevant dependencies or constraints (things like the CRMP’s dependence on funding, or other projects that can impact the CRMP’s development), and facts that are fundamental to the project (for example risk management is an issue that will need to be considered by every FRS, irrespective of a CRMP).

Logic models are very useful in visualising the linkages between a process evaluation, and outcomes and impact evaluations, as they can capture where each evaluation type fits in the model.

See Annexe Two for a Logic Model template.
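For teams that keep planning artefacts electronically, the seven headings above can also be held as a simple record so that gaps are easy to spot. The following is a minimal sketch only, not part of the Annexe Two template: the class name `LogicModel`, the helper `empty_sections`, and the sample entries are assumptions made for illustration, with the entries borrowed from the examples given in this step.

```python
from dataclasses import dataclass, field, fields


@dataclass
class LogicModel:
    """Illustrative one-page logic model using the seven headings in Step 2."""
    context: list[str] = field(default_factory=list)
    inputs: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    outcomes: list[str] = field(default_factory=list)
    impact: list[str] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)

    def empty_sections(self) -> list[str]:
        """Return the headings that have no entries yet, in model order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Sample entries for illustration only, taken from the examples in this step.
model = LogicModel(
    inputs=["funding (cost and source)", "staff", "access to data"],
    outputs=["'Targeted Safe and Well' checks"],
    outcomes=["reduce fire-setting by 30% over the next 24 months"],
    impact=["safer communities"],
)

# Headings still to be completed before the model is shared.
print(model.empty_sections())
```

Listing the empty headings is one way to check that context and assumptions, which are easy to overlook, have been written down before the model is circulated.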

Action Point: Think about the scope of the assurance evaluation: what you need to achieve in terms of the CRMP and how to get there. Write this down in a document that can be shared. Consider:

- Capture this visually by creating a logic model to illustrate the evaluation scope. Use the simple logic model in Annexe Two and add to it any ideas relating to the overall CRMP.

- To help measure progress, keep the ‘Risk Register’ updated. (For consistency of understanding, it is best to use a Risk Register that is already in use within your organisation and your programme.)

- In terms of focusing on a ‘whole’ process evaluation, you will find that many of the stakeholders will be the same throughout. Creating one over-arching model for the whole CRMP Framework (including all its themes and components) will be important to ensure a consistent approach, and in visually capturing the links between activities.

- Although inputs and outputs might be common across the whole CRMP Framework, there will be some that are linked to specific components or themes. These can be captured in the logic model as headlines, and then, if it is helpful, you could drill down into these through the creation of sub-logic models that relate to a particular theme or component.

Learning Point: Following this step, you should understand how important it is to identify and engage both internal and external stakeholders. In addition, you can begin to construct an idea of how you will communicate with, and use input from, these stakeholders.

Step 3: Engage Stakeholders

Stakeholders (both internal and external) are critical to the success of an assurance evaluation. A decision needs to be made as to who these stakeholders might be and how they might best be utilised – either to be directly involved in evaluation design, or to be sounding boards as the assurance evaluation progresses. In terms of their engagement with the evaluation, it is important to make clear to a stakeholder their role as either ‘Sounding Board’ or ‘Contributor to Design’, so that expectations are managed.

Stakeholders can helpfully provide the following:

- Design input

- Development of assurance evaluation questions

- Expert guidance

- Consultation

- Co-creation

- Sponsorship of the project

- Dissemination

The next step is to begin to identify which stakeholders fit into which categories and understand their interests and expectations. Key stakeholders can be categorised into three groups (although a stakeholder may belong to more than one of them):

- Individuals involved in the CRMP (project teams, FRS staff, communities, local businesses, even other emergency services). For example, community members may be involved in the development stage through informal engagement and questions.

- Individuals who are influenced by the CRMP (Intended beneficiaries such as the public, other agencies, FRS staff).

- Individuals who may use the CRMP assurance evaluation findings (governing bodies, strategic managers, HMICFRS, external partners).

It is important to identify and include stakeholders early in the design process. For example, there may be a need to negotiate access to information that can help define KPIs. Considering the perspectives and interests of stakeholders as early as possible also supports better co-creation and increases the probability of the evaluation findings being used to initiate change.

Step 4: Create a Stakeholder Map

A stakeholder map lists each stakeholder associated with the CRMP, defines their relationship to the evaluation, captures their expertise, identifies beneficiaries, documents their intended use of the evaluation, and assigns agreed inputs. As with a logic model, it provides a visual record of this step that can be shared and provides evidence of collaboration. This becomes a useful tool in assurance evaluation at every stage of CRMP development. See Annexe Three for a stakeholder map template. NB: although this is presented here as Step 4, it is recommended that Steps 3 and 4 are conducted in tandem.

A complete map can be difficult to plot without first engaging some stakeholders; however, a more comprehensive stakeholder map is a useful resource when trying to understand the range of stakeholders and evaluate how well the CRMP process engaged them.
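Where a stakeholder map is kept as a spreadsheet or small script rather than a diagram, the attributes described in this step can be recorded per stakeholder. The sketch below is illustrative only and is not the Annexe Three template: the class `Stakeholder`, the helper `missing_agreed_inputs`, the group labels, and the two sample entries are all assumptions. The two roles are those named in Step 3.

```python
from dataclasses import dataclass, field

# Roles named in Step 3; the group labels are shorthand chosen for this sketch.
ROLES = {"Sounding Board", "Contributor to Design"}
GROUPS = {"involved", "influenced", "uses findings"}


@dataclass
class Stakeholder:
    name: str
    role: str                      # relationship to the evaluation
    groups: set[str]               # a stakeholder may sit in more than one group
    expertise: str = ""
    is_beneficiary: bool = False
    intended_use: str = ""         # how they expect to use the evaluation
    agreed_inputs: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject roles or groups that are not part of the agreed vocabulary.
        if self.role not in ROLES:
            raise ValueError(f"unknown role: {self.role!r}")
        unknown = self.groups - GROUPS
        if unknown:
            raise ValueError(f"unknown groups: {sorted(unknown)}")


def missing_agreed_inputs(stakeholders: list[Stakeholder]) -> list[str]:
    """Names of stakeholders whose inputs have not yet been agreed."""
    return [s.name for s in stakeholders if not s.agreed_inputs]


# Hypothetical entries for illustration only.
stakeholder_map = [
    Stakeholder("Project team", "Contributor to Design", {"involved"},
                agreed_inputs=["evaluation questions"]),
    Stakeholder("Local businesses", "Sounding Board", {"involved", "influenced"},
                is_beneficiary=True),
]

print(missing_agreed_inputs(stakeholder_map))
```

Checking for stakeholders with no agreed inputs gives a quick prompt for follow-up while Steps 3 and 4 are being carried out together.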

Step 5: Agree Resource

Learning Point: This step should offer a better understanding of how to calculate the resource implications related to the assurance evaluation in terms of personnel and funding required.

Evaluations need to be proportionate in their relationship with the project, process, or programme in question – in this case the CRMP. Many organisations neglect to conduct an evaluation of any type within their projects due to cost and resource implications; however, it is all about scale. It would be rare to spend as much on the assurance evaluation of a project as is spent on the cost of the project itself – but it is essential to spend something.

Budgeting nothing for evaluation (in other words, not evaluating at all) can be considered a false economy, for it will almost certainly result in misspending or overspending elsewhere, and it will not help to inform sustainability, especially if a project is long-term, or might be repeated and replicated more widely.

Evaluations can also help to attract funding:

“If an evaluation whose cost is equal to 5 per cent of the grants under review delivers results that are 10 per cent better as a result of the evaluative information, it is a worthwhile expenditure.”

It is true that all types of evaluations can be time-consuming and expensive. Therefore, to ensure the CRMP assurance evaluation is proportionate, an assessment is required to determine scale and scope. This should include consideration of such things as:

- Attributed funds.

- Resources available.

- In-kind support (for example the re-deployment of staff, the use of external premises, knowledge, or technology).

- Governance, approval, and reporting processes.

- Strategic imperatives.

- Timeline for implementation and completion.

When assessing this, consider and review the following for the CRMP Assurance Evaluation:

- The clarity regarding what needs to be evaluated for each component of the CRMP.

- Clearly stated reasons for the assurance evaluation: its benefit and purpose.

- Ensuring that the scope of the assurance evaluation has been consulted upon and agreed.

- A stated consideration of how useful the CRMP process assurance evaluation will be (and how it will be used, and by whom), and how this will be measured.

- The extent to which there is leadership buy-in regarding strategic support and need for the evaluation.

- Whether adequate resources to fund the evaluation are available.

- How much the results might influence the future shape of the CRMP and resulting resource allocation.

Action Point: Use the planning (the scope and logic model) to determine what type of evaluation is needed. Work with the organisation and stakeholders to agree and set resources and a budget that can meet requirements. There is no hard and fast rule regarding ‘spend’ on evaluations, but as a general guide to evaluation budgets, assume that 5-10% of an overall project budget should be reserved for evaluation purposes.
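The 5-10% guide in the Action Point can be expressed as a quick calculation. The following is a minimal sketch only: the function name `evaluation_budget_range` and the example project budget of 200,000 are hypothetical and are not drawn from this guidance.

```python
def evaluation_budget_range(project_budget, low_share=0.05, high_share=0.10):
    """Return the (lower, upper) amount to reserve for evaluation.

    The 5-10% defaults reflect the general guide in the Action Point;
    they are a rule of thumb, not a fixed requirement.
    """
    if project_budget < 0:
        raise ValueError("project_budget must not be negative")
    return (project_budget * low_share, project_budget * high_share)


# Hypothetical example: an overall project budget of 200,000.
lower, upper = evaluation_budget_range(200_000)
print(f"Reserve between {lower:,.0f} and {upper:,.0f} for evaluation")
```

The shares are parameters so that a Service can substitute whatever proportion has been agreed with its stakeholders.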

Step 6: Develop the Assurance Evaluation Questions

As many stakeholders as possible should be consulted in developing evaluation questions to ensure all needs are met, and to ensure that the focus is on process questions, not on questions of outcome or impact. In other words, everyone should understand that this evaluation covers only the process of developing the CRMP.

As a reminder, the process assurance evaluation will address the extent to which the CRMP has been (or is being) implemented as planned and is reaching its intended populations. This inevitably links to outcomes and impacts, in that the questions will be based on the specific desired outputs, outcomes, and impact. However, these are not the target of the evaluation; they are merely indicators to be used to evaluate the process.

To focus the process assurance evaluation more precisely will require consideration of the types of indicators to be used to provide evidence of the process (how you will know what has happened). The following types of indicators will provide useful guidelines about what information should be collected. In other words, these are the data sources needed to demonstrate progress or change.

- Process indicators (includes inputs and outputs): These measure the extent to which planned activities took place. Examples will include the tracking of intended meetings relating to the CRMP, the distribution of timetabled update reports, the level to which those involved in the design contributed feedback about the evolution of the process, and other such indicators.

- Input indicators – these measure the quantity, quality, and timeliness of the contributions necessary to enable the CRMP to be implemented (such as funding, staff, other resources, key partners and collaborators, infrastructure, data availability and access, ability to engage relevant parties).

- Output indicators – these are a quantitative measure of activities, for example an observed reduction in the number of dwelling fires. The process evaluation will review whether the right measures were put in place for this to be recorded effectively.

In developing assurance evaluation questions for the CRMP based on the above indicators, the following should be considered:

- The CRMP Strategic Framework logic model should be consulted to construct questions on inputs and outputs.

- Questions should relate to the specific stages of development of the CRMP (for example the planning, implementation, or completion stage)

- Any previous evaluations that may relate to the CRMP and the activities associated with it should be reviewed to help generate any themes or questions.

- Based on the questions, the decisions that may need to be taken should be identified: for example, if the CRMP stakeholder base needs to be broadened, questions might be asked of current stakeholders regarding any barriers associated with their engagement.

- Although process assurance questions are not focused on measuring outputs or impacts, questions that link how the process can measure these will need to be asked. For example, if a desired outcome is to record fewer incidents related to hazards, are the appropriate questions in place to measure this?

Possible Questions

1. Was the CRMP development and delivery comprehensive, appropriate and effective?

2. Were CRMP partnership arrangements satisfactory?

3. Which stakeholders were engaged? Were these the right stakeholders? Are there ‘missing’ stakeholders? If so, who?

4. How has leadership and organisational structure affected delivery of the CRMP?

5. What is the learning and best practice from the process?

6. How does the environment or context within the Fire and Rescue Services affect delivery?

7. How does delivery differ or compare across individual Fire and Rescue Services of the same Family Group?

8. What budget was set for the CRMP and for which activities?

9. Were there sufficient resources in place to deliver the CRMP? If not, what would have helped?

10. What outputs were and are expected?

11. What were and are the experiences and perceptions of those engaging in the development of the CRMP?

12. Does the CRMP work in practice? How and why does it or does it not work?

13. What were and are the barriers and facilitators for organisations?

14. How could the barriers be minimised, and facilitators maximised?

15. Are current CRMP KPIs realistic and achievable?

16. What opportunities did the CRMP highlight?

Possible Indicators

1. The number of stakeholders involved in the CRMP.

2. Number of stakeholder types (internal and external) involved.

3. Number of CRMP milestones achieved or not achieved.

4. Participant views on the process of delivering the CRMP.

5. Stakeholder experiences of the development of the CRMP, measured against their expectations.

6. Variance between budget and spend.

7. The extent that resource allocation differed from planned allocation.

8. Level of input from stakeholders.

9. Number of ‘intended’ outputs and outcomes achieved.

10. Number of ‘intended’ outputs and outcomes not achieved.

11. Number of ‘unexpected’ outputs and outcomes achieved.

12. Number (and types) of barriers encountered.

13. Impact on outputs of barriers.

14. Number of KPIs met.

15. Number of KPIs not met (and by how much).

16. Number and types of opportunities identified.
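Several of the indicators listed above reduce to simple arithmetic once the underlying records are available. The sketch below illustrates two of them, the variance between budget and spend and the share of KPIs met. All figures and function names (`budget_variance`, `share_met`) are hypothetical and serve only as an illustration.

```python
def budget_variance(budget, spend):
    """Return (absolute variance, variance as a percentage of budget)."""
    variance = spend - budget
    return variance, 100 * variance / budget


def share_met(results):
    """Return the percentage of True entries in a list of met/not-met flags."""
    return 100 * sum(results) / len(results)


# Hypothetical figures for illustration only.
variance, variance_pct = budget_variance(budget=50_000, spend=54_000)
kpis_met = [True, True, False, True, False]  # one flag per KPI

print(f"Budget variance: {variance:+,} ({variance_pct:+.1f}%)")
print(f"KPIs met: {sum(kpis_met)} of {len(kpis_met)} ({share_met(kpis_met):.0f}%)")
```

A positive variance here means that spend exceeded budget; the sign convention is a choice made for this sketch.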

Step 7: Asking the Evaluation Questions

This step, together with Step 8, relates to, and interacts with, the gathering and analysis of process assurance evaluation data.

This headline guidance is designed to cover an aspect of process assurance evaluation; however, it should be used in conjunction with the much more ‘data specific’ guidance contained in the NFCC CRMP ‘Data and Business Intelligence’ Guidance, which forms a component of the CRMP Strategic Framework, and which covers in-depth advice on such issues as:

- Collection of data

- Data quality

- Data ethics

- Data protection

- Organising data

- Data cleaning and validation

- Data analysis

Once the required questions and indicators have been developed, a decision will need to be made regarding the method(s) to be employed in seeking responses to those questions and providing evidence. This can be a process that requires a degree of technical expertise to be carried out, for example if it requires research (in one form or another).

The most practicable and reliable methods need to be identified, and these include how the data will be collected. This may be conditioned by resource availability, knowing who will use the data and for what, or by responding to stakeholder expectations. It would be advisable to develop a data collection plan that includes the following:

- The themes to be measured and how they will be measured (these can be grouped together using the questions and indicators from Step 6).

- The dates and timeframe for the collection of data (or for when the research is to be conducted).

- How will data be collected (will it be qualitative or quantitative or both – see below for further information on these methods)?

- Where to collect data and why? There will be stakeholders and participant groups that can contribute to the whole CRMP process; however, there will also be specific aspects of the CRMP that will require targeted ‘interest’ groups and individuals. The various groups, subgroups, and representative samples of the population of interest should be classified, with a clear designation of the specific aspects or elements of the CRMP on which each group is invited to comment.

- Ethical implications involved in data collection (GDPR, data protection, anonymity, confidentiality, and informed consent) must be considered.

- Inclusion of plans for the collection of data from under-represented individuals and groups must be made. Attention needs to be paid to gathering demographic data and ‘protected characteristics’ data relating to equality, diversity, and inclusion.

Research Methods

Once the process assurance evaluation questions have been determined, a decision will need to be made regarding the type(s) of research methodology that will be applied to ask these questions. This could be a decision to select just one method (such as surveys), or a combination of methods (surveys and interviews). The decision will depend on the types of outputs required from the evaluation, using both quantitative data (measuring specifics of things like how much or how many), and qualitative data (asking how and why), which will entail deeper investigation.

The NFCC CRMP ‘Data and Business Intelligence’ Guidance will provide further support on this; however, the following table illustrates the differences between a quantitative and a qualitative approach to evaluations.

NB: Good practice is to overlap or layer both qualitative and quantitative data within an evaluation. The differences are important to understand so that the advantages of different methods can be recognised, and the strengths of each approach can be effectively pursued in tandem. When answering evaluation questions, depending too heavily on qualitative or quantitative data to the exclusion of the other will result in compromised answers. The NFCC Stakeholder and Public Engagement Guidance, and its associated video, can assist in how to manage data received from consultation and in choosing methods.

| Difference between Quantitative and Qualitative Research Approaches | | |

| Difference in… | Quantitative | Qualitative |

| Meaning | Quantitative research seeks to quantify a phenomenon. It is more structured, objective, and helps reduce bias. | Qualitative research is descriptive and is used to discover details that help explain things. It conveys the richness and depth of peoples’ thoughts and experiences. |

| Data type | Data that can easily be measured or quantified. For example, the number of people who will be placed in danger by an identified risk. | Data that represent opinions or feelings and cannot be represented by a numerical statistic such as an average. For example, what type of danger is posed by an identified risk, to whom, and what will be the result. |

| How such data is used | To numerically measure – things like who, what, when, how much, how many, how often. | To analyse how and why. |

| Objectives | To assess reach, causality, and to reach conclusions that can be generalised. For example: 50% of people in x location will be in danger due to the identified risk. | To understand processes, behaviours, and conditions as perceived by groups or individuals being studied. For example, the identified risk will result in ‘this type’ of injury but could be mitigated by ‘xyz’ actions. |

| Recommended data collection | Standardised interviews / Surveys / Questionnaires. | In-depth open interviews / participant observation / focus groups / workshops. |

| Analysis | Predominantly statistical analysis (using appropriate software) – often generalised to make wider comments on coverage and impact. | Triangulation – using multiple data sources to produce understanding – often using quotations and case studies to substantiate and illustrate findings. |

| Types of findings / responses | 25% of survey respondents stated that they felt they had participated in a thorough consultation in the development of the CRMP’s Equality Impact Assessment (EIA). 40% of respondents stated that the budget allocated to the EIA had not been spent as they had expected. | One identifiable group of respondents stated that the EIA did not cover the themes required to ensure that they were satisfied with its final assessment. One budget holder said that due to a change in plans caused by a reduction in the original budget allocated to the EIA, the number of stakeholders involved had to be reduced. |

Who should ask the questions?

Another aspect of process assurance evaluation (and of the research element in particular) that will need to be considered is who should carry out the evaluation, and to what extent objective, independent results are required. Should this be the role of internal FRS staff, an external contractor, or a combination of the two?

There are several factors to consider when making this decision. For some projects and programmes, it may be a compulsory requirement that an external evaluator completes the task. However, sometimes evaluations are conducted internally.

It may be tempting to opt for the less costly route of using an internal member of staff to conduct the CRMP evaluation. However, if it adds to an individual’s workload, and they do not have the necessary expertise, it may be a false economy and could run the risk of poor evaluation.

Another option would be to include both internal and external individuals for the evaluation. For instance, an external evaluator could be contracted to support some of the technical aspects of the evaluation (the research methods). This could combine the benefits of external expertise without losing the advantage of access to insider knowledge and understanding.

The following table outlines some of the benefits and limitations related to commissioning an external evaluator, as opposed to using internal staff.

| | Internal | External |

| Benefits | Cost – less costly. Perspective – will have more understanding of the project or programme and organisational environment. Working relationships – with staff and stakeholders are already established. | Expertise – will have skills and knowledge in evaluation methods and practice. Credibility – brings in an outsider’s perspective and is therefore more objective. Time – will have more time to dedicate to the evaluation. |

| Limitations | Expertise – may lack evaluation skills and knowledge of methods and practices. Credibility – it may be more difficult to be objective, and the perception of not being objective may apply. Time – may have limited time to dedicate to evaluation. | Cost – it may be more costly to hire external evaluators. Perspective – may not have as much understanding of project or programme and organisational environment. |

Step 8: Analysing your Responses

As previously stated, this step contains a brief overview of guidance in relation to data analysis. As with the previous step, more detailed support can be obtained through consulting the NFCC CRMP ‘Data and Business Intelligence’ Guidance.

The logical step following the collection of data is to analyse and interpret that data. This usually takes the form of entering (or capturing) data, checking quality, ensuring consistency, and analysing the data to identify your evaluation results.

Analysing process assurance evaluation data is a way of creating individual stories and making them relevant to the stakeholders involved in the CRMP. The types of analyses will depend on the types of data (qualitative or quantitative) to be analysed, the evaluation question being asked, and how (and to whom) the data is to be presented. It may also depend on who is analysing the data (internal or external individuals). The following provides some ideas on the types of analysis that could be used to undertake assurance evaluation of the development of the CRMP.

For the purposes of assurance evaluation of the CRMP process, it will probably suffice to use descriptive statistics in analysing quantitative data. These types of statistics are used to present the data in a meaningful way, one which helps to highlight trends and make sense of patterns. Some of the main types of descriptive analysis methods include:

- Measures of frequency – these are used to display counts, percentages, or frequencies. Researchers will apply this method when they want to showcase how often a response is given. For example: 65% of respondents to the survey on the effectiveness of hazard identification believe that the implementation of the CRMP Strategic Framework has resulted in fewer incidents over the last 12 months.

- Measures of central tendency – these relate to the mean, median, or mode. This method is often used to summarise where the centre of a distribution of responses lies. Researchers use this when they want to highlight the most common or the average response. For example: the mean number of hazard incidents over the last 12 months to which the CRMP has been applied (as recorded by respondents) is eight.

- Measures of variation – these relate to the spread of responses and can include range, variance, and standard deviation. For example: the number of hazard incidents over the last 12 months in which the CRMP has been applied (as reported by individual Fire and Rescue Services) ranges from four to eighteen. This is most usually applied when evaluating the outputs of the CRMP, rather than in evaluating the process by which it has been developed.
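The three families of descriptive measure above can be computed with Python's standard library alone. The following is a minimal sketch: the survey responses and incident counts are invented for illustration (chosen so that the mean is eight and the range is four to eighteen, mirroring the examples above) and do not represent real FRS data.

```python
from collections import Counter
import statistics

# Hypothetical survey responses from 20 respondents.
responses = ["agree"] * 13 + ["disagree"] * 5 + ["unsure"] * 2

# Measures of frequency: counts and percentages per response.
counts = Counter(responses)
percentages = {answer: 100 * n / len(responses) for answer, n in counts.items()}

# Hypothetical incident counts reported by eight services.
incidents = [4, 5, 6, 6, 7, 8, 10, 18]

# Measures of central tendency.
mean_incidents = statistics.mean(incidents)
median_incidents = statistics.median(incidents)
mode_incidents = statistics.mode(incidents)

# Measures of variation.
incident_range = (min(incidents), max(incidents))
std_dev = statistics.stdev(incidents)  # sample standard deviation

print("Response percentages:", percentages)
print("Mean:", mean_incidents, "Median:", median_incidents, "Mode:", mode_incidents)
print("Range:", incident_range, "Standard deviation:", round(std_dev, 2))
```

In this invented data set the single high value of eighteen pulls the mean above the median, which is one reason to report more than one measure of central tendency.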

‘Inferential statistics’ is another method that can be used to make predictions about a larger population following data analysis of the sample of respondents. For example, if in a survey on hazards 300 out of 500 respondents in a given region state that they believe that because of the CRMP, the likelihood of hazards has been reduced, researchers might then use inferential statistics to reason that about 60% of all people within that same region believe the same. Inferential statistics require more sophisticated application than descriptive statistics, and are often used when researchers want something beyond absolute numbers to understand the relationship between variables.
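One common way to express the uncertainty in such an estimate is a confidence interval for the proportion. The sketch below uses the normal-approximation (Wald) interval with the 300-of-500 figures from the example. It assumes that respondents are a simple random sample of the regional population, which the guidance does not state, and the function name `proportion_confidence_interval` is hypothetical.

```python
from math import sqrt


def proportion_confidence_interval(successes, sample_size, z=1.96):
    """Normal-approximation (Wald) interval for a population proportion.

    z=1.96 corresponds to roughly 95% confidence. Assumes the respondents
    are a simple random sample of the population of interest.
    """
    p_hat = successes / sample_size
    standard_error = sqrt(p_hat * (1 - p_hat) / sample_size)
    margin = z * standard_error
    return p_hat, p_hat - margin, p_hat + margin


# Figures from the example in the text: 300 of 500 respondents agree.
p_hat, lower, upper = proportion_confidence_interval(300, 500)
print(f"Estimate: {p_hat:.0%}, approx. 95% interval: {lower:.1%} to {upper:.1%}")
```

Under these assumptions the interval extends roughly four percentage points either side of 60%. A sample that is not random (for example, self-selecting survey respondents) would not support this kind of generalisation without further adjustment.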

It should be noted that data analysis is a skill that requires some learning in terms of understanding and application.

However, it is worth considering some of the commonly used methods for analysis in research that might be applied in relation to evaluating data derived from the CRMP – particularly by evaluators with the necessary expertise.

Some of the easier to grasp methods include:

- Correlation – this relates to the relationship between two or more variables. For example, it may be instructive to analyse the relationship between the degree of a stakeholder’s involvement in developing the CRMP and their perception of the impact the CRMP has had on hazard incidents.

- Cross-tabulation – this can be used to analyse the relationship between multiple response variables. It is used to understand more granular responses to a question. For example, in evaluating the degree to which the public feels it has been consulted on the CRMP, it would be helpful to disaggregate responses in terms of demographics such as age, gender, and ethnicity.

- Content Analysis – this is a widely accepted and used technique employed in analysing information from text and images and, for example, would be a useful tool to use in reviewing documents relating to the development of the CRMP.

- Narrative Analysis – this method is used to analyse content gathered from sources such as personal interviews, observation, focus groups, and surveys. Experiences and opinions shared by individuals are always shaped by personal experiences and these can be interpreted in many ways by researchers. For example, conducting an interview with semi-structured questions might elicit more reflective responses than running an interview with very fixed questions.
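The first two methods in the list above, correlation and cross-tabulation, can be sketched with the standard library. The data below are invented for illustration, the helper `pearson` is a hand-written Pearson coefficient rather than a call to any particular statistics package, and the age bands are an assumption.

```python
from collections import Counter
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical data: meetings attended vs. perceived impact score (1-5).
involvement = [1, 2, 3, 4, 5, 6]
perceived_impact = [2, 2, 3, 3, 4, 5]
r = pearson(involvement, perceived_impact)

# Hypothetical survey rows: (age group, felt consulted?).
rows = [("18-34", "yes"), ("18-34", "no"), ("35-64", "yes"),
        ("35-64", "yes"), ("65+", "no"), ("65+", "yes")]
crosstab = Counter(rows)  # counts per (age group, answer) pair

print(f"r = {r:.2f}")
for (age_group, answer), count in sorted(crosstab.items()):
    print(age_group, answer, count)
```

A coefficient close to 1 indicates that the two measures rise together in this sample; it does not by itself show that involvement causes the perception.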

Key Considerations in Analysing Data Related to Assurance Evaluation of the CRMP process

- Evaluators and researchers must have the necessary skills to analyse the data. Competencies needed for this can be found in the Competencies Framework. Ideally, those charged with the task should possess more than a basic understanding of the rationale for selecting one analytical method over another to obtain better data insights.

- Getting statistical advice from an expert at the beginning of analysis will help to ensure that the research design is right in terms of how to ask questions, what to ask, and in framing the potential types of analysis.

- The primary aim of data research and analysis in relation to evaluation is to arrive at unbiased insights. Constructing an unbiased evaluation is essential. The key to good data analysis is to be open-minded in that analysis – to reflect what people are saying, and to tell their stories.

Step 9: Report and Disseminate

Learning Point: Understand how to ensure that findings are communicated in a way that targets a variety of audiences, and learn about the different types of reporting formats.

All the actions and results of the previous steps are captured in the reporting and dissemination phase. This is where the evaluation findings are shared, audiences engage with the findings, decisions are influenced, proposed change in delivery is documented, outcomes and impacts are captured (again, these refer to the process of developing the CRMP, not to the impacts of the operational activities that may result), and recommendations are made.

Previous evidence / analysis of interdependencies within the process should be referenced in terms of good practice; however, this type of information should not be used to make a judgment on outcomes / impact.

Emphasis should be placed on communication and dissemination with a view to increasing knowledge, raising awareness, influencing behaviour, and assisting decision-making. The main challenge is to ensure that any interpretation of the findings is anchored to the original research questions. To do this, the original questions should be grouped into themes and linked to the corresponding findings from the analysis; this information can then be used to create the materials that communicate the findings. Guidance on how to present findings to remove unintended bias can be found in the videos that supplement the Stakeholder and Public Engagement Guidance.

Presentation of process assurance evaluation findings can take many forms, such as a written report, slide show presentation, infographics, or an informational video. Visual aids can be powerful methods for communicating evaluation results. Make results available to various stakeholders and audiences; tailor what is disseminated to their specific interest in the evaluation and how they plan to use the results.

Review recommendations with stakeholders to identify and agree actionable outcomes. Discuss what has been learned from conducting the process assurance evaluation, and agree next steps to incorporate and use the results. Prioritise actions arising from the recommendations and co-develop an action plan with those people and groups who will be impacted by the CRMP, the evaluation, or both.

Annexe 1: Evaluation types

Outcomes Evaluation

Outcomes are the developmental changes observed and experienced between the completion of outputs and the achievement of impact. They are the changes that can be attributed to organisational or project activities. These can be intended outcomes or unintended outcomes, they may be positive or negative, and they will be linked to any pertinent impacts. For example, one of the intended outcomes from ‘Hazard Identification’ could be that a ‘particular hazard’ that might impact on a specific vulnerable group is identified, and this hazard is mitigated. Outcome evaluations move away from the approach of assessing project results against project objectives and move towards an assessment of how these results contribute to a change in development conditions.

Outcome evaluation measurements include the following:

- whether an outcome has been achieved or progress made towards it

- how, why and under what circumstances the outcome has changed

- contributions to the progress towards or achievement of the outcome, and

- strategies employed in pursuing the outcome

As well as planned changes, projects often produce outcomes that are unexpected, so it is important that information is collected in a way that can provide evidence of this. Longer-term outcomes may often be less easy to evaluate than intermediate ones. The CRMP provides a service which is a link in a chain of services, or part of a multi-functional agency intervention. For these processes, final outcomes in one part (such as Hazard Identification) may be intermediate outcomes as part of the whole CRMP.

Key consideration: Outcome indicators measure the results generated by programme outputs. They often correspond to any change in the behaviour of people or of organisations as a result of the project/programme.

Impact Evaluation

Impact evaluations are designed to value the results of outcomes. For example, if an intended outcome from ‘Hazard Identification’ is to mitigate the risk to a vulnerable group (the outcome), the impact might be that fewer people from that vulnerable group are hospitalised, something that can be monetised in terms of lower cost to the NHS (impact).

Impact evaluations assist with:

- assessing outcomes for groups directly and indirectly affected by projects and processes

- providing an explanation of how the outcomes come about and how they vary in different contexts

- providing evidence on the impact and economic and social value generated by projects, and

- ensuring robustness and sensitivity

Essentially, this involves comparison with:

- baselines – the prevailing levels and trends that were identified prior to the project or processes commencing; this is especially relevant when reviewing a previous CRMP

- counterfactuals – the levels and trends that might have been seen in the outcomes without the Strategic Framework being in place

- further analysis of research evidence, secondary data sources and any benchmarks relating to the outcome and its drivers

- looking forward in order to measure persistence effects; wider take-up; sustainability. This involves monitoring beyond a project’s lifetime to understand the actual ‘reach’ of the project, the ‘potential’ reach of the project (including such things as possible deployment to other areas), and future requirements in terms of things such as funding and environmental considerations.

Key consideration: Impact indicators measure the quality and quantity of results generated by project and programme outputs and outcomes, such as measurable change in quality of training provision, reduced incidence of absence, increased income for women, increased investment in training, decrease in drop-out from training provision.